from D3adC0r3

Test post: does this work?

Read the latest posts from Infosec Press.

from critic

It has become a fad by now, but personally I don't see the point, especially for the casual runner, of comparing yourself with others on a platform that essentially wants your data in order to profile you.

from A&B Vietnam

The taste of Vietnamese beer is making an ever stronger mark on the global beverage map thanks to its outstanding quality. Among its producers, A&B Vietnam stands out as a pioneering company with more than 35 years of experience, operating 70,000 m² of production facilities with a capacity of more than 1,000 containers per month. A&B Vietnam has exported beer to more than 70 countries and fully meets stringent standards such as ISO, HACCP, HALAL, and FDA. Read on to learn more about A&B Vietnam's beer lines, which combine Vietnamese spirit with global quality.

Details: Best-priced export beer - A&B Vietnam

Beer is a familiar alcoholic beverage, brewed by fermenting ingredients such as barley malt, hops, yeast, and water. It is one of the drinks with the longest history and is closely tied to the culinary traditions of many peoples.

At A&B Vietnam, export beer is not only a refreshing drink but also a product that expresses Vietnamese cultural identity at an international level of quality. A&B Vietnam always emphasizes balance of flavor, freshness, a closed-loop production process, and international standards. As a result, from clean lagers and full-flavored ales to 0.0% non-alcoholic beer, each product has its own distinctive character and meets the varied demands of different markets.

With the vision of becoming the region's leading beer producer and exporter, A&B Vietnam has built a very diverse product portfolio to serve the differing tastes of international consumers.

Every beer line is produced on state-of-the-art equipment in strict compliance with international standards, both preserving the Vietnamese flavor and affirming the quality expected of a reputable international supplier.

To create beers with a consistent, characteristic flavor and high quality, A&B Vietnam pays particular attention to every stage, from selecting the core ingredients to the production process. Only the best inputs enter the production line, to ensure a consistent standard of flavor.

The brewing process consists of 11 closed-loop steps, from ingredient preparation, mashing and saccharification, to fermentation, filtration, and packaging. The whole process is closely monitored against ISO 22000, HACCP, HALAL, and FDA requirements, so that every can of exported beer meets global standards.

To serve the varied needs of importers and international distribution systems, A&B Vietnam offers many packaging options. Each design is optimized for convenience, shelf life, and suitability for its distribution channel.

Thanks to this variety, A&B Vietnam's beer products are not only convenient to import and distribute but also make a professional impression on consumers in many markets worldwide.

With strong production capacity and a global sales network, A&B Vietnam has brought Vietnamese beer brands such as Camel, Cheetah, Abest, Saola, Steen, and Two Red Tigers to many important regions. A&B Vietnam carefully studies the characteristics of each market in order to offer the most suitable products.

With a supply capacity of more than 1,000 containers per month, A&B Vietnam not only meets steady demand but is also ready to expand its market share. This is the foundation for us to become a strategic beer export partner for many businesses.

In a fiercely competitive environment, choosing a reputable manufacturing and export partner is a decisive factor in success. With its experience, modern production base, and international standards, A&B Vietnam has become a trusted name.

Combining deep experience, modern technology, and assured quality, A&B Vietnam not only supplies beer products that meet the standards but also accompanies its partners in conquering global markets. Contact A&B Vietnam now to take the flavor of Vietnamese beer further together.

A&B Vietnam

from b

“In June 2019, Portland Antifa terrorists were arrested after assaulting rightwing demonstrators and police with quick drying cement and bear spray.” – The White House

PPB’s baseless lie from 6 years ago is being used as pretext for an authoritarian crackdown against Portlanders, as well as decent, working class volunteers who oppose fascists and neo-Nazis all over the USA.

At any point, PPB could have displayed a minimal commitment to truth and civic responsibility by retracting this bullshit; but, even as their own Police Commissioner & Mayor Ted Wheeler’s City Hall was evacuated due to bomb threats, they never did.

PPB consistently displays far-right malice toward the people of PDX, and is too rarely held to account for it. Nobody should be under the illusion that they’re going to be helpful against Trump’s current fascist military deployment.

from 📰wrzlbrmpft's cyberlights💥

A weekly shortlist of cyber security highlights. The short summaries are AI generated! If something is wrong, please let me know!

🚗 Stellantis says a third-party vendor spilled customer data data breach – Stellantis confirms a data leak due to a third-party vendor breach, exposing customer names and emails. They have initiated an investigation and warned customers about potential phishing risks. https://www.theregister.com/2025/09/22/stellantis_breach/

⚠️ FBI alerts public to spoofed IC3 site used in fraud schemes cybercrime – The FBI warns of spoofed IC3 websites designed to steal personal information from users reporting cybercrimes. Users should verify URLs carefully to avoid falling victim to fraud. https://securityaffairs.com/182449/cyber-crime/fbi-alerts-public-to-spoofed-ic3-site-used-in-fraud-schemes.html

🦠 Here’s how potent Atomic credential stealer is finding its way onto Macs malware – Malicious ads impersonate services like LastPass to spread Atomic Stealer on Macs. Users are warned to avoid clicking ads and to download software only from official websites. https://arstechnica.com/security/2025/09/potent-atomic-credential-stealer-can-bypass-gatekeeper/

🎮 Steam game removed after cryptostealer takes over $150K malware – A Steam game was pulled after a cryptostealer exploited it, stealing over $150,000 from users. The incident highlights the ongoing risks of malware in gaming platforms. https://www.theverge.com/news/782993/steam-blockblasters-crypto-scam-malware

😩 AI ‘Workslop’ Is Killing Productivity and Making Workers Miserable privacy – A study reveals that AI-generated content, termed 'workslop', burdens workers with fixing low-quality outputs, undermining productivity rather than enhancing it. Companies struggle to define AI's benefits amid rising risks. https://www.404media.co/ai-workslop-is-killing-productivity-and-making-workers-miserable/

🚧 Jaguar Land Rover extends shutdown again following cyberattack data breach – Jaguar Land Rover's operations remain halted due to a cyberattack, with losses estimated at £50-70 million daily. The shutdown affects thousands of workers and disrupts the broader supply chain. https://therecord.media/jaguar-land-rover-extends-shutdown-again-cyberattack

🧳 Worried About Phone Searches? 1Password’s Travel Mode Can Clean Up Your Data privacy – 1Password’s Travel Mode helps protect your data during phone searches by removing sensitive information temporarily. This feature is ideal for travelers concerned about privacy. https://www.wired.com/story/1password-travel-mode/

⚖️ What to do if your company discovers a North Korean worker in its ranks cyber defense – Companies discovering North Korean IT workers face complex legal and cybersecurity challenges. Experts advise cooperation with the workers, careful monitoring, and engaging law enforcement to mitigate risks. https://cyberscoop.com/north-korean-it-workers-enterprise-risks-sanctions-response/

📰 Researchers say media outlet targeting Moldova is a Russian cutout security research – Researchers link the online news outlet REST Media to the Russian disinformation group Rybar, revealing its role in influencing Moldova's elections through deceptive tactics and social media. https://cyberscoop.com/researchers-say-media-outlet-targeting-moldova-is-russian-cutout/

💰 Feds Tie ‘Scattered Spider’ Duo to $115M in Ransoms – Krebs on Security cybercrime – U.S. prosecutors charged Thalha Jubair and Owen Flowers, members of the Scattered Spider group, with hacking and extorting over $115 million. Their operations involved significant cyberattacks against major retailers and transport systems. https://krebsonsecurity.com/2025/09/feds-tie-scattered-spider-duo-to-115m-in-ransoms/

🚓 ‘Find My Parking Cops’ Tracks Officers Handing Out Tickets All Around San Francisco privacy – Riley Walz created 'Find My Parking Cops,' a site that maps San Francisco parking officers issuing tickets, helping users avoid fines. The city responded by altering access to public data. https://www.404media.co/find-my-parking-cops-tracks-officers-handing-out-tickets-all-around-san-francisco/

✈️ UK arrests man in airport ransomware attack that caused delays across Europe security news – A man was arrested in connection with a ransomware attack affecting multiple European airports, causing significant flight delays. The attack targeted the MUSE software, with reports suggesting simple ransomware tools were used. https://www.theverge.com/news/784786/uk-nca-europe-airport-cyberattack-ransomware-arrest

🔒 Volvo North America disclosed a data breach following a ransomware attack on IT provider Miljödata data breach – A ransomware attack on supplier Miljödata exposed personal data of Volvo North America employees, including names and Social Security numbers. Volvo is offering affected individuals 18 months of identity protection services. https://securityaffairs.com/182577/data-breach/volvo-north-america-disclosed-a-data-breach-following-a-ransomware-attack-on-it-provider-miljodata.html

🚨 Cybercrooks publish toddlers' data in 'reprehensible' attack data breach – The Radiant Group targeted Kido International, leaking sensitive data of toddlers and their parents, including names and addresses. Experts condemned the attack as a severe moral low for cybercriminals. https://www.theregister.com/2025/09/25/ransomware_gang_publishes_toddlers_images/

☁️ DOGE might be storing every American’s SSN on an insecure cloud server privacy – Senate Democrats report that DOGE has transferred sensitive information, potentially including Social Security numbers, to a cloud server, raising concerns about catastrophic security risks. https://www.theverge.com/news/785706/doge-insecure-cloud-server-social-security-numbers

🔒 Viral call-recording app Neon goes dark after exposing users' phone numbers, call recordings, and transcripts data breach – The call-recording app Neon has been taken offline after a security flaw exposed users' phone numbers, call recordings, and transcripts. The founder announced the shutdown while failing to address the security lapse. https://techcrunch.com/2025/09/25/viral-call-recording-app-neon-goes-dark-after-exposing-users-phone-numbers-call-recordings-and-transcripts/

🤖 Researchers expose MalTerminal, an LLM malware – MalTerminal is the first known malware using LLM technology to create malicious code dynamically, complicating detection for defenders. Researchers highlight the evolving threat landscape with LLM-integrated attacks. https://securityaffairs.com/182433/malware/researchers-expose-malterminal-an-llm-enabled-malware-pioneer.html

⚖️ Modern Solution: Bundesverfassungsgerich bestätigt – Wegsehen ist sicherer als Aufdecken security news – Germany's courts penalize a security expert for exposing a major vulnerability in e-commerce software instead of holding the developer accountable, undermining responsible disclosure and IT security. https://www.kuketz-blog.de/modern-solution-bundesverfassungsgerich-bestaetigt-wegsehen-ist-sicherer-als-aufdecken/

💰 $150K awarded for L1TF Reloaded exploit that bypasses cloud mitigations vulnerability – Researchers earned $150K for exploiting L1TF Reloaded, leaking VM memory from public clouds despite mitigations. The attack demonstrates ongoing risks from transient CPU vulnerabilities. https://securityaffairs.com/182476/security/150k-awarded-for-l1tf-reloaded-exploit-that-bypasses-cloud-mitigations.html

📞 Secret Service says it dismantled extensive telecom threat in NYC area cybercrime – The Secret Service disrupted a telecom network in NYC, uncovering 300 servers and 100,000 SIM cards used for encrypted communications by threat actors. Concerns about potential disruptions during the U.N. General Assembly were raised. https://cyberscoop.com/secret-service-dismantles-nyc-telecom-threat-un-general-assembly/

🔓 Bypassing Mark of the Web (MoTW) via Windows Shortcuts (LNK): LNK Stomping Technique hacking write-up – The LNK Stomping technique exploits Windows shortcuts to bypass security checks by manipulating file metadata, allowing attackers to execute malicious payloads undetected. This method highlights the evolving nature of cyber threats. https://asec.ahnlab.com/en/90299/

⚠️ Critical Vulnerability in SolarWinds Web Help Desk vulnerability – SolarWinds disclosed a critical vulnerability (CVE-2025-26399) in its Web Help Desk, allowing unauthenticated remote code execution. Users are urged to update to the latest version immediately. https://cert.europa.eu/publications/security-advisories/2025-034/

🛡️ EDR Bypass Technique Uses Windows Functions to Put Antivirus Tools to Sleep security research – The EDR-Freeze technique allows attackers to bypass endpoint detection and response (EDR) tools by using Windows functions to suspend antivirus processes without installing vulnerable drivers. This new method enhances evasion tactics for threat actors. https://thecyberexpress.com/edr-bypass-technique-disables-antivirus/

⚠️ High Vulnerability in Cisco IOS and IOS XE Software warning – Cisco reported a high-severity vulnerability (CVE-2025-20352) in its IOS and IOS XE software SNMP subsystem, allowing remote code execution or denial of service. Immediate updates and security assessments are recommended. https://cert.europa.eu/publications/security-advisories/2025-035/

⚠️ Worries mount over max-severity GoAnywhere defect vulnerability – Concerns grow over a maximum-severity vulnerability (CVE-2025-10035) in GoAnywhere MFT, with evidence of active exploitation. Researchers criticize Fortra for lack of transparency regarding the vulnerability's status. https://cyberscoop.com/goanywhere-vulnerability-active-exploitation-september-2025/

🔐 Critical Vulnerabilities in Cisco ASA and FTD warning – Cisco disclosed critical vulnerabilities (CVE-2025-20333, CVE-2025-20363, CVE-2025-20362) in its ASA and FTD software, allowing remote code execution. Immediate updates and compromise assessments are recommended. https://cert.europa.eu/publications/security-advisories/2025-036/

🔒 SonicWall Releases Advisory for Customers after Security Incident security news – SonicWall alerts customers about a security incident where brute force attacks accessed cloud backup files. Users are urged to verify their account and follow guidance to secure their devices. https://www.cisa.gov/news-events/alerts/2025/09/22/sonicwall-releases-advisory-customers-after-security-incident

🔍 CISA Shares Lessons Learned from an Incident Response Engagement cyber defense – CISA's response to a cyber incident revealed critical vulnerabilities exploited via CVE-2024-36401. Key lessons include the importance of timely patching and robust incident response plans. https://www.cisa.gov/news-events/cybersecurity-advisories/aa25-266a

🤞 CISA Directs Federal Agencies to Identify and Mitigate Potential Compromise of Cisco Devices security news – CISA issued Emergency Directive ED 25-03, urging federal agencies to address vulnerabilities in Cisco ASA and Firepower devices. Agencies must identify affected devices and transmit memory files for analysis by September 26. https://www.cisa.gov/news-events/alerts/2025/09/25/cisa-directs-federal-agencies-identify-and-mitigate-potential-compromise-cisco-devices

⚠️ CISA Adds One Known Exploited Vulnerability to Catalog warning – CISA has included CVE-2025-10585, a Google Chromium V8 Type Confusion Vulnerability, in its KEV Catalog due to active exploitation risks. Federal agencies must remediate identified vulnerabilities promptly. https://www.cisa.gov/news-events/alerts/2025/09/23/cisa-adds-one-known-exploited-vulnerability-catalog

⚙️ Dingtian DT-R002 vulnerability – Dingtian DT-R002 relay boards have critical vulnerabilities (CVE-2025-10879 and CVE-2025-10880) that allow unauthorized retrieval of credentials. Users are urged to restrict access and enhance security measures. https://www.cisa.gov/news-events/ics-advisories/icsa-25-268-01

⚙️ CISA Releases Six Industrial Control Systems Advisories vulnerability – CISA issued six advisories detailing vulnerabilities in various Industrial Control Systems, including AutomationDirect and Mitsubishi Electric. Users are urged to review for mitigation strategies. https://www.cisa.gov/news-events/alerts/2025/09/23/cisa-releases-six-industrial-control-systems-advisories

While my intention is to pick news that everyone should know about, it still is what I think is significant, cool, fun... Most of the articles are in English, but some current warnings might be in German.

from Bruno's ramblings

Five years later, we finally had proper vacations! 🎉 We chose the northern interior part of Alentejo because we had a voucher that covered most of the hotel cost, and we could use it to spend a few nights in the monastery of Crato, a breathtaking place that looks more like a castle. I highly recommend it if you want to spend some days in that area and can afford the prices. It even has a museum there that you can visit.

We only ended up staying one night because Chico, our cat, has some abandonment trauma, and we knew he would be stressed out while we were away. He had my parents to look out for him, and he's very comfortable around them, but he spent most of the time in the downstairs living room, where he usually spends very little time, switching between couches, waiting for us to come home, sometimes crying, and barely eating. 😿

Even though we only had two days to visit the area, and we wanted to go to the villages of Castelo de Vide and Marvão, we managed to find some spots with stunning views! 🤩 Some were plains, some were mountainous areas, but all were chef's kiss.

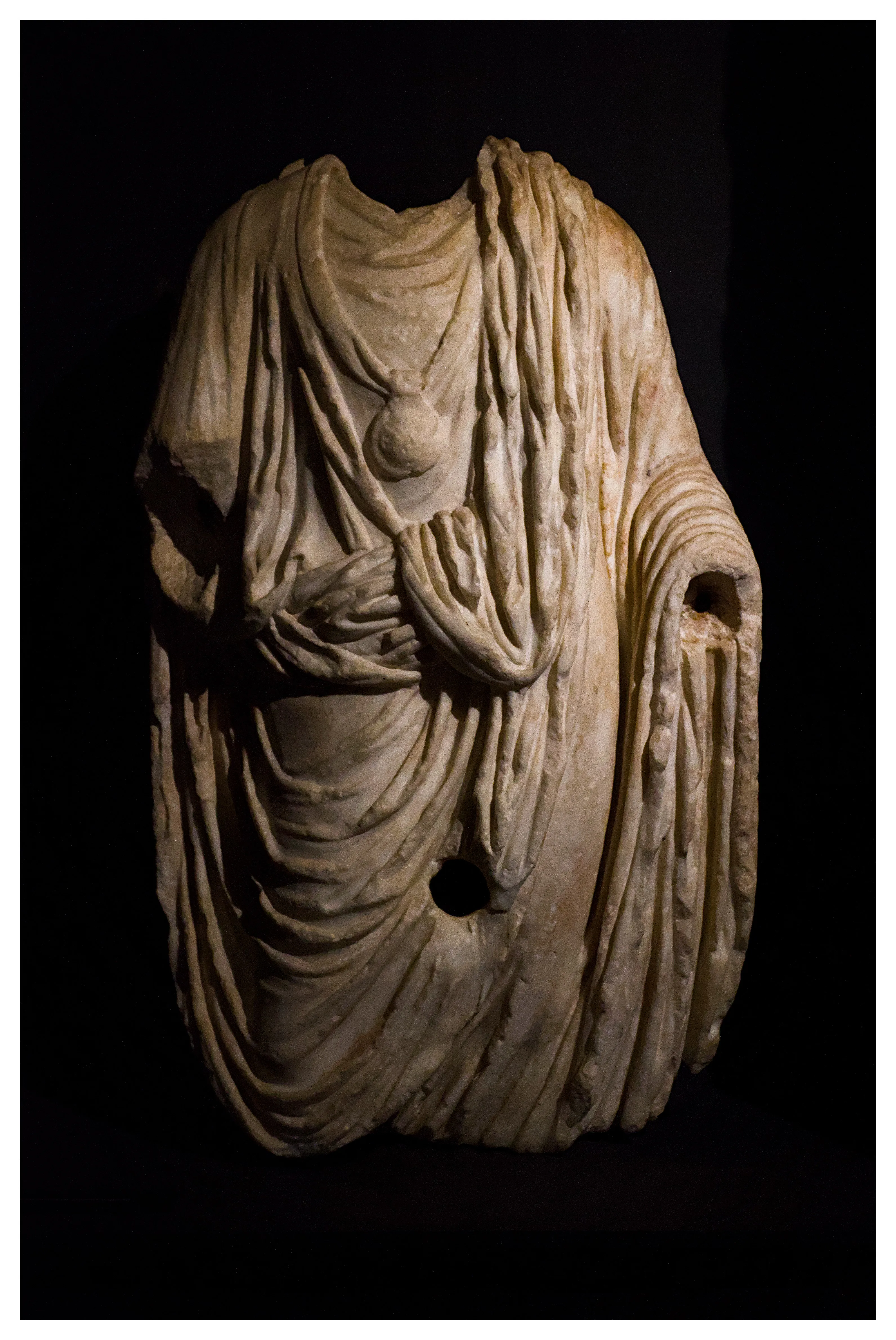

The Roman Museum of Ammaia, in Marvão, is also worth a visit. The outside part is bare, but it has some cool stuff in the interior exhibit. It's just a shame I couldn't touch anything behind glass. 😭 I wanted to touch an amphora and some coins so much! I'm not joking!

We did have issues finding a place to eat. Most of the restaurants in the center of each village were closed, even some that were suggested to me, and the few that were open had long queues. It's better to try to find something on the outskirts of the villages; you'll have slightly more options and far shorter queues, if any.

Overall, even if it's exhausting, especially in Marvão, where you have to walk up to the castle, the area has several cool places to visit.

Speaking of exhausting, I was cursing so much as I was walking to Marvão's castle, because it was making my legs feel like they were being ripped apart, that several other tourists, especially the Portuguese and Spanish ones, looked at me in a bit of shock. That actually ended up making me laugh and helped distract me a little from that insane pain.

This was out of character for me because, in a normal situation, I wouldn't subject myself to this. But the wife was super excited about this, so I chose to try and tough it out, knowing I would pay for it later. Well, I'm paying for it hard, but I don't regret it. Even I needed this!

All the photos here are mine. You can use them under the CC0 License.

#CasteloDeVide #Marvão #Alentejo #Vacations

from 📰wrzlbrmpft's cyberlights💥

A weekly shortlist of cyber security highlights. The short summaries are AI generated! If something is wrong, please let me know!

✈️ Airlines Sell 5 Billion Plane Ticket Records to the Government For Warrantless Searching privacy – Major airlines are selling billions of ticket records to the government for warrantless monitoring, raising significant privacy concerns about surveillance of individuals' movements. https://www.404media.co/airlines-sell-5-billion-plane-ticket-records-to-the-government-for-warrantless-searching/

🔑 Password Security Part 2: The Human Factor – Password Patterns and Weaknesses cyber defense – Human behavior leads to predictable password patterns that compromise security. Organizations can mitigate risks through password policies, filtering, and multi-factor authentication, while credential audits reveal weaknesses. https://www.guidepointsecurity.com/blog/password-security-part-2-human-factor-patterns-weaknesses/

💼 Hackers steal millions of Gucci, Balenciaga, and Alexander McQueen customer records data breach – Hackers, identified as Shiny Hunters, stole personal data of millions from luxury brands Gucci, Balenciaga, and Alexander McQueen, including names and contact details, raising concerns about targeted scams. https://securityaffairs.com/182236/cyber-crime/hackers-steal-millions-of-gucci-balenciaga-and-alexander-mcqueen-customer-records.html

🦠 FileFix attacks trick victims into executing infostealers malware – The FileFix attack tricks victims into executing malware by posing as a Facebook security alert, leading to the installation of the StealC infostealer. This method has surged in popularity, emphasizing the need for improved anti-phishing training. https://www.theregister.com/2025/09/16/filefix_attacks_facebook_security_alert/

🤖 Millions turn to AI chatbots for spiritual guidance and confession privacy – Tens of millions are using AI chatbots for spiritual advice, with apps gaining popularity for their accessibility. However, concerns arise over their accuracy, privacy, and the nature of their responses. https://arstechnica.com/ai/2025/09/millions-turn-to-ai-chatbots-for-spiritual-guidance-and-confession/

🛡️ OpenAI to predict ages in bid to stop ChatGPT from discussing self harm with kids privacy – OpenAI is implementing age prediction and identity verification systems to protect minors after a lawsuit linked its chatbot to a teenager's suicide. The company prioritizes safety over privacy for younger users. https://therecord.media/openai-age-prediction-chatgpt-children-safety

🔒 Samsung patches zero-day security flaw used to hack into its customers' phones vulnerability – Samsung has patched a zero-day vulnerability that allowed hackers to remotely install malicious code on devices running Android 13 to 16, following a private alert from Meta and WhatsApp. https://techcrunch.com/2025/09/16/samsung-patches-zero-day-security-flaw-used-to-hack-into-its-customers-phones/

🔧 Apple addresses dozens of vulnerabilities in latest software for iPhones, iPads and Macs vulnerability – Apple's latest updates for iOS, iPadOS, and macOS patch multiple vulnerabilities, including some with potential root access, but no active exploits have been reported. Users can also update to earlier versions for critical patches. https://cyberscoop.com/apple-security-updates-september-2025/

⚖️ BreachForums founder resentenced to three years in prison cybercrime – Conor Brian Fitzpatrick, founder of the BreachForums cybercrime marketplace, was resentenced to three years in prison after a lenient initial sentence was overturned due to his lack of remorse and continued illegal activities. https://cyberscoop.com/conor-fitzpatrick-pompompurin-resetenced-breachforums/

🖥️ Consumer Reports asks Microsoft to keep supporting Windows 10 security news – Consumer Reports has urged Microsoft to continue supporting Windows 10, highlighting concerns about user security and compatibility as the transition to Windows 11 proceeds. https://www.theverge.com/news/779079/consumer-reports-windows-10-extended-support-microsoft

📰 Russian fake-news network back in action with 200+ new sites security news – A Russian troll farm has launched over 200 new fake news websites using AI to generate content, aiming to influence political discourse in multiple countries, including the US and Canada. https://www.theregister.com/2025/09/18/russian_fakenews_network/

🔒 CVE-2025-10585 is the sixth actively exploited Chrome zero-day patched by Google in 2025 vulnerability – Google patched four vulnerabilities in Chrome, including the actively exploited zero-day CVE-2025-10585, a type confusion issue in the V8 engine, marking the sixth such vulnerability in 2025. https://securityaffairs.com/182322/uncategorized/cve-2025-10585-is-the-sixth-actively-exploited-chrome-zero-day-patched-by-google-in-2025.html

🛠️ Open-Source Tool Greenshot Hit by Severe Code Execution Vulnerability vulnerability – A critical vulnerability in Greenshot allows arbitrary code execution due to improper data handling, risking exploitation by local attackers. Users are urged to update to version 1.3.301 to mitigate the issue. https://thecyberexpress.com/greenshot-vulnerability/

📚 Librarians Are Being Asked to Find AI-Hallucinated Books security news – Librarians report increasing patron requests for non-existent books generated by AI, leading to confusion and diminished trust in information sources. The impact of generative AI on libraries raises concerns about information literacy and the quality of resources. https://www.404media.co/librarians-are-being-asked-to-find-ai-hallucinated-books/

🚆 ‘Scattered Spider’ teens charged over London transportation hack cybercrime – Two teenagers from the 'Scattered Spider' group have been charged in connection with a cyberattack that disrupted London's transportation systems, highlighting growing concerns about youth involvement in cybercrime. https://www.theverge.com/news/781039/scattered-spider-teens-charged-tfl-london-hack

✈️ Russia's main airport in St. Petersburg says its website was hacked security news – Pulkovo Airport in St. Petersburg experienced a cyberattack that took its website offline, although flight operations remained unaffected. This follows other disruptions in Russia's aviation sector amid rising cyberattacks since the Ukraine invasion. https://therecord.media/russia-pulkovo-airport-st-petersburg-website-hacked

👶 Watchdog finds MrBeast improperly collected children’s data privacy – The Children’s Advertising Review Unit found that YouTuber MrBeast collected children's data without parental consent, violating COPPA guidelines. He has since updated his data collection practices in response to the findings. https://therecord.media/watchdog-mrbeast-youtube-privacy-colection

🚗 JLR Cyberattack Becomes UK National Crisis cybercrime – The Jaguar Land Rover cyberattack has halted production, affecting over 200,000 workers and prompting government discussions for support. The incident, attributed to the Scattered Lapsus$ Hunters group, is causing significant financial losses. https://thecyberexpress.com/jlr-cyberattack-becomes-uk-national-crisis/

✈️ Hundreds of flights delayed at Heathrow and other airports after apparent cyberattack security news – A cyber-related incident involving Collins Aerospace led to significant flight delays at major European airports, including Heathrow, as airlines reverted to manual check-ins. Travelers are advised to arrive earlier for flights. https://techcrunch.com/2025/09/21/hundreds-of-flights-delayed-at-heathrow-and-other-airports-after-apparent-cyberattack/

🚨 T-1 month: Exchange Server 2016 and Exchange Server 2019 End of Support security news – Exchange Server 2016 and 2019 reach end of support on October 14, 2025, risking security vulnerabilities without updates. Users are urged to upgrade or migrate to Exchange Online. https://techcommunity.microsoft.com/blog/exchange/t-1-month-exchange-server-2016-and-exchange-server-2019-end-of-support/4453133

🕵️‍♂️ One Token to rule them all – obtaining Global Admin in every Entra ID tenant via Actor tokens vulnerability – A critical vulnerability in Entra ID allows attackers to impersonate Global Admins across tenants using undocumented Actor tokens. Microsoft swiftly fixed the issue, but risks remain. https://dirkjanm.io/obtaining-global-admin-in-every-entra-id-tenant-with-actor-tokens/

💨 Hosting a WebSite on a Disposable Vape hacking write-up – An innovative project explores hosting a web server on a disposable vape's microcontroller, achieving surprisingly fast response times despite its limited specs. A humorous take on tech recycling! https://bogdanthegeek.github.io/blog/projects/vapeserver/

🔓 Windows Local Privilege Escalation through the bitpixie Vulnerability vulnerability – The bitpixie vulnerability allows attackers to bypass BitLocker encryption via a downgrade attack on Windows Boot Manager, risking unauthorized access. A Microsoft patch is available to mitigate this risk. https://blog.syss.com/posts/bitpixie/

🚨 China Imposes One-Hour Reporting Rule for Major Cybersecurity Incidents security news – China's new regulations mandate reporting severe cybersecurity incidents within one hour, enhancing enforcement following high-profile data breaches. Proposed law amendments suggest stricter penalties for non-compliance. https://thecyberexpress.com/china-cybersecurity-incident-reporting/

🛡️ Google Online Security Blog: Supporting Rowhammer research to protect the DRAM ecosystem security research – Google supports research on Rowhammer vulnerabilities in DRAM, leading to the development of test platforms and new attack patterns that expose weaknesses in existing mitigations, necessitating further improvements. http://security.googleblog.com/2025/09/supporting-rowhammer-research-to.html

🐍 Replicating Worm Hits 180+ Software Packages – Krebs on Security cybercrime – The Shai-Hulud worm has infected over 180 NPM packages, stealing credentials and publishing them on GitHub. It self-replicates, raising concerns over supply chain security in software development. https://krebsonsecurity.com/2025/09/self-replicating-worm-hits-180-software-packages/

🚫 Microsoft, Cloudflare shut down RaccoonO365 phishing domains cyber defense – Microsoft seized 338 domains linked to the RaccoonO365 phishing operation, led by Joshua Ogundipe, which sold phishing kits that compromised Microsoft 365 credentials. The takedown disrupts a major tool used by cybercriminals. https://www.theregister.com/2025/09/16/microsoft_cloudflare_shut_down_raccoono365/

💻 HybridPetya: The Petya/NotPetya copycat comes with a twist malware – ESET has identified a new ransomware called HybridPetya, which mimics NotPetya but can also compromise UEFI systems and exploit CVE‑2024‑7344 to bypass UEFI Secure Boot. It's not currently spreading in the wild. https://www.welivesecurity.com/en/videos/hybridpetya-petya-notpetya-copycat-twist/

🔓 Attack on SonicWall’s cloud portal exposes customers’ firewall configurations data breach – SonicWall confirmed a breach of its MySonicWall.com platform, exposing firewall configuration files of less than 5% of its customers. The incident highlights systemic security issues within the vendor's operations. https://cyberscoop.com/sonicwall-cyberattack-customer-firewall-configurations/

⛈️ Cloudflare DDoSed itself with React useEffect hook blunder security news – Cloudflare experienced an outage due to a coding error involving a React useEffect hook, which caused excessive API calls and overloaded its Tenant Service API. The incident sparked discussions on the proper use of useEffect in development. https://www.theregister.com/2025/09/18/cloudflare_ddosed_itself/

⚙️ SystemBC – Bringing the Noise security research – Lumen's Black Lotus Labs discovered the SystemBC botnet, leveraging over 80 C2s and primarily targeting VPS systems to create high-volume proxies for cybercriminal activities. The botnet is linked to various criminal groups and is being used alongside the REM Proxy service for malicious operations. https://blog.lumen.com/systembc-bringing-the-noise/

🔒 CISA Warns of New Malware Campaign Exploiting Ivanti EPMM Vulnerabilities vulnerability – CISA reports a malware campaign exploiting Ivanti EPMM vulnerabilities (CVE-2025-4427 and CVE-2025-4428), allowing unauthorized access and malware deployment. Organizations are urged to upgrade systems and implement security measures. https://thecyberexpress.com/cisa-mar-cve-2025-4427-28/

🔐 CVE-2025-10035: Critical Vulnerability in Fortra GoAnywhere MFT vulnerability – A critical vulnerability, CVE-2025-10035, has been identified in Fortra's GoAnywhere MFT software, potentially exposing sensitive data. Users are urged to apply patches immediately to mitigate risks. https://www.vulncheck.com/blog/cve-2025-10035-fortra-go-anywhere-mft

🤔 Future of CVE Program in limbo as CISA, board members debate path forward security news – The future of the CVE Program is under debate after a funding incident raised concerns about its management. CISA asserts its leadership role while board members advocate for a collaborative, globally-supported model. https://therecord.media/cve-program-future-limbo-cisa

⚙️ CISA Releases Eight Industrial Control Systems Advisories vulnerability – CISA has issued eight advisories addressing vulnerabilities in various Industrial Control Systems, including products from Siemens, Schneider Electric, and Hitachi Energy, urging users to review for mitigations. https://www.cisa.gov/news-events/alerts/2025/09/16/cisa-releases-eight-industrial-control-systems-advisories

⚙️ CISA Releases Nine Industrial Control Systems Advisories vulnerability – CISA has issued nine advisories addressing vulnerabilities in various Industrial Control Systems, including products from Westermo, Schneider Electric, and Hitachi Energy, urging users to review for mitigations. https://www.cisa.gov/news-events/alerts/2025/09/18/cisa-releases-nine-industrial-control-systems-advisories

While my intention is to pick news that everyone should know about, it still is what I think is significant, cool, fun... Most of the articles are in English, but some current warnings might be in German.

from 📰wrzlbrmpft's cyberlights💥

A weekly shortlist of cyber security highlights. The short summaries are AI generated! If something is wrong, please let me know!

🤞 We Got Lucky: The Supply Chain Disaster That Almost Happened No summary here, just a recommendation to read https://www.aikido.dev/blog/we-got-lucky-the-supply-chain-disaster-that-almost-happened

💾 Signal introduces free and paid backup plans for your chats security news – Signal now allows users to back up chats for free and offers a paid plan for full media backups. This enhances its value for secure messaging amid privacy concerns. https://techcrunch.com/2025/09/08/signal-introduces-free-and-paid-backup-plans-for-your-chats/

📺 Plex admits breach of account details, hashed passwords data breach – Plex has warned users to reset passwords after a breach potentially exposed emails, usernames, and hashed passwords. While credit card data wasn't compromised, this incident echoes previous breaches. https://www.theregister.com/2025/09/09/plex_breach/

🏋️‍♂️ Call audio from gym members, employees in open database data breach – An unprotected AWS database exposed sensitive audio recordings of gym members discussing personal and financial information. This raises concerns about potential identity theft and social engineering attacks. https://www.theregister.com/2025/09/09/gym_audio_recordings_exposed/

🔒 Apple says the iPhone 17 comes with a massive security upgrade security news – Apple's iPhone 17 features Memory Integrity Enforcement, an always-on security measure aimed at complicating spyware development, enhancing user protection. https://www.theverge.com/news/775234/iphone-17-air-a19-memory-integrity-enforcement-mte-security

📱 Nepal lifts social media ban after deadly youth protests security news – Nepal has lifted a ban on social media platforms following violent protests that resulted in 29 deaths. The government faced criticism for the ban, deemed digital repression by rights groups. https://therecord.media/nepal-social-media-ban-lifted-after-deadly-protests

🚗 Jaguar Land Rover says data stolen in disruptive cyberattack data breach – Jaguar Land Rover reported a cyberattack that resulted in data theft and halted vehicle assembly lines. The extent of the stolen data and its impact on employees or customers remains unclear. https://techcrunch.com/2025/09/10/jaguar-land-rover-says-data-stolen-in-disruptive-cyberattack/

🖼️ Google Online Security Blog: How Pixel and Android are bringing a new level of trust to your images with C2PA Content Credentials security news – Google's Pixel and Android devices now utilize C2PA Content Credentials to enhance image authenticity, providing users with verifiable trust in their images and combating misinformation. http://security.googleblog.com/2025/09/pixel-android-trusted-images-c2pa-content-credentials.html

🔐 Brussels faces privacy crossroads over encryption backdoors privacy – Europe debates legislation requiring scanning of user content for child abuse, raising concerns over privacy and security. Critics argue it could lead to false accusations and a significant erosion of digital rights. https://www.theregister.com/2025/09/11/eu_chat_control/

💻 Kids in the UK are hacking their own schools for dares and notoriety cybercrime – The ICO reports that over half of personal data breaches in UK schools are caused by students, often through weak passwords and lax security practices. https://techcrunch.com/2025/09/11/kids-in-the-uk-are-hacking-their-own-schools-for-dares-and-notoriety/

🛡️ FTC opens inquiry into how AI chatbots impact child safety, privacy privacy – The FTC is investigating how major tech companies protect children using AI chatbots, focusing on safety measures and privacy practices. This follows concerns over negative impacts, including a tragic suicide case linked to a chatbot. https://therecord.media/ftc-opens-inquiry-ai-chatbots-kids

⚠️ Apple issues spyware warnings as CERT-FR confirms attacks warning – Apple has issued alerts about a spyware campaign affecting iCloud-linked devices, confirmed by France's CERT-FR. Notifications indicate potential compromises, often involving sophisticated attacks with zero-day vulnerabilities. https://securityaffairs.com/182129/malware/apple-issues-spyware-warnings-as-cert-fr-confirms-attacks.html

🔒 Swiss government looks to undercut privacy tech, stoking fears of mass surveillance privacy – The Swiss government plans to require service providers to collect IDs, retain user data for six months, and potentially disable encryption, raising concerns over mass surveillance and the impact on privacy tech companies. https://therecord.media/switzerland-digital-privacy-law-proton-privacy-surveillance

🔒 Samsung fixed actively exploited zero-day vulnerability – Samsung patched CVE-2025-21043, a zero-day vulnerability that allowed remote code execution on Android devices. The flaw was exploited in attacks without user interaction, raising concerns over security. https://securityaffairs.com/182135/hacking/samsung-fixed-actively-exploited-zero-day.html

⚖️ Hacker convicted of extorting 20,000 psychotherapy victims walks free during appeal cybercrime – Aleksanteri Kivimäki, convicted of extorting over 20,000 psychotherapy clients, was released on appeal while his case continues. The hack has deeply impacted Finnish society, with many victims still suffering. https://therecord.media/finland-vastaamo-hacker-free-during-appeal-conviction

🧺 Dutch students denied access to jailbroken laundry machines security news – Over 1,250 University of Amsterdam students are without laundry services after a cyberattack compromised smart machines, allowing free washing. Management company Duwo refuses to restore the service due to costs. https://www.theregister.com/2025/09/12/jailbroken_laundry_machines/

🔓 Vietnam, Panama governments suffer incidents leaking citizen data data breach – Vietnam's National Credit Information Center suffered a data breach, with hackers claiming to have stolen 160 million records. Meanwhile, Panama's Ministry of Economy and Finance reported a cyberattack, with the INC ransomware gang claiming to have stolen 1.5 terabytes of data. https://therecord.media/vietnam-cic-panama-finance-ministry-cyberattacks

🚆 British rail passengers urged to stay on guard after hack signals failure data breach – LNER warns passengers of a data breach involving a third-party supplier, exposing contact details and journey information. Customers are advised to be cautious of unsolicited communications, although no payment details were compromised. https://www.bitdefender.com/en-us/blog/hotforsecurity/british-rail-passengers-hack-signals-failure

🎢 Exploiting the Impossible: A Deep Dive into A Vulnerability Apple Deems Unexploitable vulnerability – A deep dive reveals a race condition in Apple's file-copy API that could be exploited, challenging Apple's belief that it was unexploitable. This vulnerability poses significant security risks. https://jhftss.github.io/Exploiting-the-Impossible/

🐱‍👤 Break The Protective Shell Of Windows Defender With The Folder Redirect Technique hacking write-up – This article details a method for exploiting Windows Defender's update mechanism through symbolic links, allowing attackers to control its execution folder and potentially disable the antivirus. https://www.zerosalarium.com/2025/09/Break-Protective-Shell-Windows-Defender-Folder-Redirect-Technique-Symlink.html

🔓 Hackers breached Salesloft’s GitHub in March, and used stolen tokens in a mass attack security news – Salesloft's GitHub was breached by hackers who stole tokens, leading to a mass attack on major clients like Google and Cloudflare. Security measures are now in place after a lengthy detection delay. https://securityaffairs.com/182002/hacking/hackers-breached-salesloft-s-github-in-march-and-used-stole-tokens-in-a-mass-attack.html

💻 18 Popular Code Packages Hacked, Rigged to Steal Crypto – Krebs on Security malware – Eighteen widely-used JavaScript packages were compromised to steal cryptocurrency after a developer was phished. Experts warn that such supply chain attacks could lead to more severe malware outbreaks. https://krebsonsecurity.com/2025/09/18-popular-code-packages-hacked-rigged-to-steal-crypto/

🕵️‍♂️ Detecting Active Directory Password-Spraying with a Honeypot Account cyber defense – This article outlines a method to detect password-spraying attacks using a honeypot account, reducing false positives by monitoring logon attempts specifically associated with this account. https://trustedsec.com/blog/detecting-password-spraying-with-a-honeypot-account
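The honeypot idea can be read as follows: a decoy account that no legitimate user or service ever uses should see no logon attempts at all, so any attempt is suspicious by construction. The minimal sketch below assumes logon events have already been parsed into simple records. The `LogonEvent` fields, the account name `svc-backup-old` and the function name are hypothetical and not taken from the linked article.

```python
from dataclasses import dataclass

HONEYPOT_ACCOUNT = "svc-backup-old"  # hypothetical decoy account name


@dataclass(frozen=True)
class LogonEvent:
    username: str
    source_ip: str
    success: bool


def spray_sources(events, honeypot=HONEYPOT_ACCOUNT):
    """Map each source that touched the honeypot to every account it tried."""
    decoy = honeypot.lower()
    # Any source that attempted the decoy account is treated as suspect.
    suspects = {e.source_ip for e in events if e.username.lower() == decoy}
    tried = {}
    for e in events:
        if e.source_ip in suspects:
            tried.setdefault(e.source_ip, set()).add(e.username.lower())
    return tried


events = [
    LogonEvent("alice", "10.0.0.5", False),
    LogonEvent("svc-backup-old", "10.0.0.5", False),
    LogonEvent("carol", "10.0.0.5", False),
    LogonEvent("bob", "10.0.0.9", True),  # normal logon, never touches the decoy
]

for ip, accounts in spray_sources(events).items():
    print(ip, sorted(accounts))
```

Keying the alert on the decoy account rather than on failure counts is what keeps false positives low in this sketch: a user who mistypes a password never touches the honeypot, so only sources that enumerate accounts show up.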

🔧 Zero Day Initiative — The September 2025 Security Update Review vulnerability – September updates include Adobe's fixes for 22 CVEs across various products, and Microsoft's 80 CVEs, featuring Critical vulnerabilities like remote code execution. No active exploitation noted. https://www.thezdi.com/blog/2025/9/9/the-september-2025-security-update-review

⚠️ SAP warns of high-severity vulnerabilities in multiple products vulnerability – SAP has identified multiple high-severity vulnerabilities, including a critical flaw rated 10 in NetWeaver, allowing unauthenticated command execution. Immediate patching is advised to prevent exploitation. https://arstechnica.com/security/2025/09/as-hackers-exploit-one-high-severity-sap-flaw-company-warns-of-3-more/

🎓 The State of Ransomware in Education 2025 security research – Sophos' study reveals evolving ransomware threats in education, highlighting phishing and exploited vulnerabilities as primary attack vectors. Recovery efforts decline while ransom demands and payments drop significantly, indicating growing resilience. https://news.sophos.com/en-us/2025/09/10/the-state-of-ransomware-in-education-2025/

😅 The npm incident frightened everyone, but ended up being nothing to fret about cybercrime – An npm account compromise led to malicious code injection in popular packages, causing initial panic. However, the attack's impact was minimal, quickly contained, and the community's response proved effective. https://cyberscoop.com/open-source-npm-package-attack/

🐛 ChillyHell modular macOS malware OKed by Apple in 2021 malware – ChillyHell, a modular macOS backdoor that passed Apple's notarization, has likely infected systems undetected since 2021. Its advanced features include multiple persistence methods and command-and-control protocols. https://www.theregister.com/2025/09/10/chillyhell_modular_macos_malware/

🔍 Do we invest too much in risk assessments and too little in security? cyber defense – Organizations should prioritize basic security controls over risk assessments. A structured approach involves establishing foundational security, followed by managed capabilities and risk-based enhancements to improve overall security posture. https://safecontrols.blog/2025/09/10/do-we-invest-too-much-in-risk-assessments-and-too-little-in-security/

☁️ VMSCAPE Spectre vulnerability leaks cloud secrets vulnerability – ETH Zurich researchers have discovered the VMSCAPE vulnerability, a Spectre-based exploit that allows cloud users to leak secrets from the hypervisor on AMD and Intel CPUs without code changes. Software mitigations are required to address the issue. https://www.theregister.com/2025/09/11/vmscape_spectre_vulnerability/

🦠 SonicWall firewalls targeted by fresh Akira ransomware surge cybercrime – Researchers warn of a surge in Akira ransomware attacks exploiting a year-old vulnerability in SonicWall firewalls. Improper configurations and failure to reset passwords have exacerbated the issue, with multiple organizations affected. https://cyberscoop.com/sonicwall-akira-ransomware-attacks-surge/

💻 HybridPetya ransomware dodges UEFI Secure Boot malware – HybridPetya, a new ransomware strain, exploits a vulnerability to bypass UEFI Secure Boot on Windows systems. While currently a proof-of-concept, it demonstrates significant technical capabilities, including MFT encryption. https://www.theregister.com/2025/09/12/hopefully_just_a_poc_hybridpetya/

⚙️ CISA Releases Fourteen Industrial Control Systems Advisories vulnerability – CISA issued fourteen advisories detailing vulnerabilities in various Industrial Control Systems, including multiple Rockwell Automation products. Users are urged to review these for mitigation strategies. https://www.cisa.gov/news-events/alerts/2025/09/09/cisa-releases-fourteen-industrial-control-systems-advisories

⚙️ CISA Releases Eleven Industrial Control Systems Advisories vulnerability – CISA issued eleven advisories on September 11, 2025, detailing vulnerabilities in various ICS products, primarily from Siemens and Schneider Electric. Users are urged to review these advisories for mitigation strategies. https://www.cisa.gov/news-events/alerts/2025/09/11/cisa-releases-eleven-industrial-control-systems-advisories

⚠️ CISA Adds One Known Exploited Vulnerability to Catalog warning – CISA has added CVE-2025-5086, a deserialization vulnerability in Dassault Systèmes DELMIA Apriso, to its KEV Catalog due to active exploitation risks. Federal agencies are required to remediate identified vulnerabilities promptly. https://www.cisa.gov/news-events/alerts/2025/09/11/cisa-adds-one-known-exploited-vulnerability-catalog

While my intention is to pick news that everyone should know about, it still is what I think is significant, cool, fun... Most of the articles are in English, but some current warnings might be in German.

from Sirius

The other day I was reading “Homo bolsonarus” by Renato Lessa (available for free online), and one of the things I find thought-provoking in the text is the idea that Bolsonarism is an institution.

Lessa discusses how Hobbes observed the human capacity to produce “artificial animals”, which are institutions.

Not every artificial animal we create, of course, has to be a perverse beast. It is also possible to see a struggle between these animal constructs (though not in the style of a Pokémon duel). Indeed, we are currently watching the official institutions of the Judiciary and the PGR (previously lenient under the leadership of Augusto Aras) fight the Bolsonarist beast.

But setting aside the main focus of the professor's text, Bolsonarism, what I liked most was the closing tip: that we can create other institutions! And I would add that our artificial animals do not need to be state-run.

Central Power is significant, but it is also limited; what an institution really needs is a “strong discourse”, capable of bringing people together around solid convictions and beliefs.

This fediverse, for example, is the infrastructure of an institution, of an artificial animal that we created. It is not state-run, we did not create a Leviathan, nor is it a little bird or a butterfly owned by capital. Some of us call it a mastodon, but we know it is bigger than that.

In this specific case, moreover, we who feed our little creature need to make this discourse stronger, to give more consistency to the beliefs that sustain this institution (information, dialogue, and communication that are public, open, decentralized, etc.).

When Bolsonarism reached the Executive Branch (along with a significant share of the seats in Congress), it certainly gained strength, and those were four painful years, complete with a global pandemic for the beast to claim more victims…

But it only managed to reach the executive because it was a strong institution, independent of Central Power, that channeled the emotions and beliefs of many people!

The partial power won through the election and the rise to the federal executive also had a limiting aspect. The structure of the Separation of Powers made the creature screech with hatred and complain that the Judiciary would not let it misgovern in peace… Even the Legislature demanded many amendments and did not grant the beast's every wish.

What I would like to stress, however, is that these experiences show us that we too can crystallize our beliefs in a humanity that is not alienated, not oppressed, free from the yoke of financial power and the surveillance of today's capitalism, by means of new animal constructs!

For that we need unity, cooperation, consensus, dialogue, respect, political skill and, above all, to practice experimentation and creativity.

Comment on this humble essay here

#instituições #fediverso #bolsonarismo

from লোকমানুষ এর ব্লগ

Two doors: on one side the sound of weeping, on the other cheers of joy. And between them a room, called the 'waiting room.' Here the clock of time moves slowly, yet heartbeats race at the speed of a wild horse. On this stage of life's debts and dues, the hospital is the place where every minute has its own color and every breath its own meaning. Looking at the people waiting in the chairs, you can see how waiting draws their anxiety up into their expressions. In some eyes a fog of fear, on some lips a silent prayer; in some hands a tightly clutched prescription, on some faces an invisible emptiness. The smell of antiseptic in every corner, the tick-tock of the wall clock, attendants with faces full of busyness; taken together, it seems as if someone has slowed time down and wound it through all of this. For some, five minutes here are an unbearable eternity, while for others those same five minutes are part of a new sunrise in life.

Here, on the two sides of the doctor's chamber door, are two separate worlds. The patient who has gone inside wonders: is the doctor examining me properly? He quickly asked a few questions, quickly ordered a few tests, wrote down the names of some unfamiliar medicines; is that really enough? Meanwhile the person sitting outside thinks: so much time to see one patient! Will my turn even come today? Everyone waits here with two opposite expectations at the same time. We expect close attention when it comes to our own problem, and show only irritation when it comes to others. The same person, the same doctor; simply by standing on either side of the door, what we want and what we get take on different shapes.

One often hears: the doctor seems somehow indifferent, he did not hear me out, did not ask about everything, did not understand properly. So are they indifferent?!! Not at all. Rather, after handling the same anxiety, the same pain, the same kind of worry, the same emergencies day after day, routine has taken up residence on their faces in place of a smile. It is not harshness or anger but a thin layer of self-protection in fulfilling their duty, so that hands do not tremble, swept away by emotion, and so that no wavering arises in the mind when making decisions. The same goes for nurses; there is no end to our complaints about their brusque manner and stern behavior. Yet that brusque manner, the "routine" they follow, is precisely the safety barricade for you and me. When to give the injection, when the saline, which medicine first, whose blood pressure is dropping: while balancing all these equations, by offering a small nod of comfort or by refusing to indulge your unreasonable demands, they are in fact putting you first.

Spending some time in a hospital waiting room offers a chance to see life in a different light. In the next chair, perhaps, joy clinging to a new father's shoulder; a little further off, the silent, drawn-out breathing of an elderly woman lying on a stretcher; someone quietly counting prayer beads, someone arranging money over the phone, someone googling the terms in a report heading by heading to find out what they mean. Amid all these scenes one understands that our personal storms are not the world's greatest tidal surge. Rather, the pain that is tearing the chest apart today, tomorrow we may realize, was not an onrushing sea at all but only a small wave on the shore.

We humans are very self-centered. We want complete attention for ourselves, and show irritation at even a little attention given to others. Yet within this one structure of a hospital, thousands of stories unfold at once. Here one person's delay becomes the reason another survives. Here one person's brief wait becomes, for someone else, a step on the staircase back toward life. Here time is not a straight line but a crooked, beeping sine wave. Here the worry lines on a forehead and the dimple of joy on a cheek happen at the same time.

Hospitals show a remarkable harmony of courtesy, compassion, respect, and responsibility. When the doctor sets aside personal irritation and asks about your problem with a smile, that is courtesy; when the hands of a nurse, past twelve hours into a 3 a.m. shift, place a cannula flawlessly, that is compassion; when, despite a long queue, the doctor orders no test beyond what is needed, that is responsibility; when, to keep the diagnosis right, the doctor asks you the five right questions instead of twenty-five, that is the respect shown to you. These are things to recognize and to emulate. Care is not always expressed through a smile; in many cases it is expressed through quiet accuracy.

Still, there will be complaints; a hospital means paperwork, bills, queue numbers, crowds, commotion, bewilderment. We want humanity; the system wants order. A bridge is needed between the two: respect for waiting and trust in care. If we can sort our needs and our questions, and keep our queries and demands within the bounds of time, things become easier. And on the other side, when someone behind the counter says, "Please wait a moment, we are looking into yours," the heavy air of waiting grows lighter. You and I, doctors and nurses: together, if we wish, we can build this bridge.

Finally, when you leave the waiting room and start down the corridor, look back once. You will see that right after you another story has arrived at that same door. Someone may be sharing their joy over a phone call; someone is sitting quietly, crying; some are comforting each other with a hand on the shoulder. This continuity is what we call life. Some lose, some gain; some learn to be still, some are moved to gratitude.

A hospital teaches us to accept time and to understand people. Accepting time makes waiting bearable; understanding people makes complaints shorter. The next time you sit in a waiting room, shake the irritation from your mind. Take a deep breath and tell yourself: "This is only a journey; patience and waiting will get me to my destination." You will find that the hands of the same clock still move forward with their tick-tock, but this time the tune will spread tenderness instead of irritation.

Passing through the waiting room door, we will return to our own lives. Behind us will remain the busy hospital and its waiting room. But the lesson of the waiting room must be remembered too. We must remember that sometimes the most important work in the world is to wait patiently. And we must remember that somewhere, someone is still working diligently for us. All that is needed is a little patience and a little trust. Time will walk us the rest of the way on its own.

from 📰wrzlbrmpft's cyberlights💥

A weekly shortlist of cyber security highlights. The short summaries are AI generated! If something is wrong, please let me know!

🤖 Microsoft launches Copilot AI function in Excel, but warns not to use it in 'any task requiring accuracy or reproducibility' security news – Microsoft's new Copilot AI for Excel simplifies formula generation but raises concerns about accuracy and privacy, warning against use in critical tasks. https://www.pcgamer.com/software/ai/microsoft-launches-copilot-ai-function-in-excel-but-warns-not-to-use-it-in-any-task-requiring-accuracy-or-reproducibility/

🔑 CERT.at Ewig ruft das Passwort warning – The article discusses the persistent reliance on passwords, their vulnerabilities, and the importance of robust security measures, including monitoring leaks and implementing two-factor authentication. https://www.cert.at/de/blog/2025/8/ewig-ruft-das-passwort

🏨 Attackers Target Hotelier Accounts in Malvertising and Phishing Campaign cybercrime – A phishing campaign impersonating hotel service providers uses malvertising to harvest credentials and bypass MFA, targeting cloud-based property management systems and exploiting user trust. https://sec.okta.com/articles/2025/08/attackers-target-hotelier-accounts-in-broad-phishing-campaign/

📱 Malicious apps with +19M installs removed from Google Play because spreading Anatsa banking trojan and other malware malware – Experts discovered 77 malicious Android apps on Google Play, collectively installed over 19 million times, spreading the Anatsa banking trojan and other malware, highlighting significant risks for users. https://securityaffairs.com/181528/malware/malicious-apps-with-19m-installs-removed-from-google-play-because-spreading-anatsa-banking-trojan-and-other-malware.html

📷 CBP Had Access to More than 80,000 Flock AI Cameras Nationwide privacy – Customs and Border Protection accessed over 80,000 Flock ALPR cameras across the U.S., revealing extensive data-sharing practices with local police departments unaware of the collaboration. https://www.404media.co/cbp-had-access-to-more-than-80-000-flock-ai-cameras-nationwide/

🛒 Auchan discloses data breach: data of hundreds of thousands of customers exposed data breach – Auchan reported a data breach affecting hundreds of thousands of customers, exposing personal information linked to loyalty cards, while assuring that sensitive banking data was not compromised. https://securityaffairs.com/181556/data-breach/auchan-discloses-data-breach-data-of-hundreds-of-thousands-of-customers-exposed.html

🆔 FBI, Dutch cops seize fake ID marketplace that sold identity docs for $9 cybercrime – Authorities have shut down VerifTools, a major marketplace for fake IDs, which facilitated identity theft and fraud. The seizure is seen as a significant blow against online crime. https://www.theregister.com/2025/08/28/fbi_dutch_cops_seize_veriftools/

🤖 Not in my browser! Vivaldi capo doubles down on generative AI ban privacy – Vivaldi's CEO opposes integrating generative AI in browsers, arguing it threatens user control and web diversity. He emphasizes prioritizing human interaction over automated solutions. https://www.theregister.com/2025/08/28/vivaldi_capo_doubles_down_on/

🕵️‍♂️ TransUnion says hackers stole 4.4 million customers’ personal information data breach – TransUnion has revealed a breach affecting 4.4 million customers, with sensitive data including names and Social Security numbers compromised. The company provides little clarity on the incident. https://techcrunch.com/2025/08/28/transunion-says-hackers-stole-4-4-million-customers-personal-information/

🚗 Security researcher maps hundreds of TeslaMate servers spilling Tesla vehicle data security research – A security researcher discovered over 1,300 publicly exposed TeslaMate servers leaking sensitive vehicle data, urging users to secure their dashboards to prevent unauthorized access. https://techcrunch.com/2025/08/26/security-researcher-maps-hundreds-of-teslamate-servers-spilling-tesla-vehicle-data/

🤦 OpenAI admits ChatGPT safeguards fail during extended conversations security news – OpenAI acknowledged failures in ChatGPT's safety measures during long conversations, which may lead to harmful guidance, following a lawsuit linked to a user's suicide after extensive interactions with the AI. https://arstechnica.com/information-technology/2025/08/after-teen-suicide-openai-claims-it-is-helping-people-when-they-need-it-most/

🔒 DOGE uploaded live copy of Social Security database to 'vulnerable' cloud server, says whistleblower data breach – A whistleblower claims the Department of Government Efficiency uploaded sensitive Social Security data to a vulnerable cloud server, risking the personal information of millions of Americans. https://techcrunch.com/2025/08/26/doge-uploaded-live-copy-of-social-security-database-to-vulnerable-cloud-server-says-whistleblower/

📄 Hackers use fake NDAs to deliver malware to US manufacturers cybercrime – Hackers are targeting U.S. manufacturers by using website contact forms to deliver malware disguised as non-disclosure agreements, maintaining engagement to appear credible and leveraging legitimate cloud services. https://therecord.media/hackers-fake-ndas-malware

🚴‍♂️ Developer Unlocks Newly Enshittified Echelon Exercise Bikes But Can't Legally Release His Software security news – An app developer jailbroke Echelon exercise bikes to restore offline functionality after a controversial firmware update, but copyright laws prevent him from legally sharing the software. https://www.404media.co/developer-unlocks-newly-enshittified-echelon-exercise-bikes-but-cant-legally-release-his-software/

💰 Euro banks block 'unauthorized' PayPal direct debits cybercrime – German banks froze billions in PayPal transactions due to unauthorized direct debits linked to a fraud-detection failure, impacting transactions primarily in Germany, though PayPal claims the issue is resolved. https://www.theregister.com/2025/08/28/euro_banks_block_paypal_direct_debits/

🛡️ 200 Swedish municipalities impacted by a major cyberattack on IT provider cybercrime – A cyberattack on Miljödata disrupted services across over 200 Swedish municipalities, raising concerns about stolen sensitive data and leading to a police investigation and reports of extortion. https://securityaffairs.com/181668/security/200-swedish-municipalities-impacted-by-a-major-cyberattack-on-it-provider.html

🎰 Affiliates Flock to ‘Soulless’ Scam Gambling Machine – Krebs on Security cybercrime – A new Russian affiliate program, Gambler Panel, has led to the rise of scam gambling sites that lure users with fake promotions and steal cryptocurrency deposits, operating under the guise of legitimate gaming. https://krebsonsecurity.com/2025/08/affiliates-flock-to-soulless-scam-gambling-machine/

🔒 WhatsApp fixes 'zero-click' bug used to hack Apple users with spyware vulnerability – WhatsApp addressed a zero-click vulnerability (CVE-2025-55177) in its iOS and Mac apps, exploited alongside an Apple flaw to stealthily hack targeted users' devices, allowing data theft without interaction. https://techcrunch.com/2025/08/29/whatsapp-fixes-zero-click-bug-used-to-hack-apple-users-with-spyware/

🎣 Phishing Emails Are Now Aimed at Users and AI Defenses security research – New phishing tactics not only deceive users but also target AI defenses with hidden prompts, complicating automated threat detection and increasing risks. https://malwr-analysis.com/2025/08/24/phishing-emails-are-now-aimed-at-users-and-ai-defenses/

🔥 Citrix forgot to tell you CVE-2025-6543 has been used as a zero day since May 2025 vulnerability – Citrix's CVE-2025-6543 vulnerability, exploited for remote code execution, has led to severe breaches in NetScaler systems, highlighting a lack of transparency and response from Citrix. https://doublepulsar.com/citrix-forgot-to-tell-you-cve-2025-6543-has-been-used-as-a-zero-day-since-may-2025-d76574e2dd2c

🐳 Docker fixes critical Desktop flaw allowing container escapes vulnerability – Docker patched a critical vulnerability (CVE-2025-9074) in Docker Desktop that allowed attackers to escape containers and access the Docker Engine API, risking host file access. https://securityaffairs.com/181545/security/docker-fixes-critical-desktop-flaw-allowing-container-escapes.html

🗣️ With AI chatbots, Big Tech is moving fast and breaking people privacy – AI chatbots are creating harmful feedback loops for vulnerable users, validating false beliefs and grandiose fantasies, leading to serious psychological risks and an urgent need for regulation and user education. https://arstechnica.com/information-technology/2025/08/with-ai-chatbots-big-tech-is-moving-fast-and-breaking-people/

🔓 Widespread Data Theft Targets Salesforce Instances via Salesloft Drift vulnerability – A data theft campaign exploited OAuth tokens in Salesloft Drift to access Salesforce customer data, prompting security measures and warnings for all users to review integrations and credentials. https://cloud.google.com/blog/topics/threat-intelligence/data-theft-salesforce-instances-via-salesloft-drift/

🕵️‍♂️ DSLRoot, Proxies, and the Threat of ‘Legal Botnets’ – Krebs on Security cybercrime – A Redditor's arrangement with DSLRoot, a residential proxy service, raises concerns about security risks, revealing the company's questionable origins and the emergence of 'legal botnets' exploiting residential connections. https://krebsonsecurity.com/2025/08/dslroot-proxies-and-the-threat-of-legal-botnets/

🔑 Goodbye Legacy MFA: Be Ready for the new Microsoft Authentication Methods Policy security news – Microsoft will retire legacy MFA and SSPR policies on September 30, 2025, transitioning to a unified Authentication Methods policy to enhance security and simplify management for organizations. https://www.guidepointsecurity.com/blog/goodbye-legacy-mfa-new-microsoft-authentication-methods-policy/

💻 First known AI-powered ransomware uncovered by ESET Research malware – ESET researchers discovered PromptLock, the first known AI-powered ransomware capable of exfiltrating and encrypting data, showcasing the potential for AI tools to enhance ransomware attacks. https://www.welivesecurity.com/en/ransomware/first-known-ai-powered-ransomware-uncovered-eset-research/

⚙️ Nx NPM packages poisoned in AI-assisted supply chain attack malware – Nx suffered a supply chain attack with malicious NPM packages that harvested developer credentials, exposing over 1,000 GitHub tokens and 20,000 files, utilizing AI tools for reconnaissance. https://www.theregister.com/2025/08/27/nx_npm_supply_chain_attack/

☎️ Experts warn of actively exploited FreePBX zero-day vulnerability – A serious zero-day vulnerability in FreePBX is being exploited, allowing unauthorized access to systems. Users are advised to update their software and restrict admin panel access. https://securityaffairs.com/181693/hacking/experts-warn-of-actively-exploited-freepbx-zero-day.html

🔒 Over 28,000 Citrix instances remain exposed to critical RCE flaw CVE-2025-7775 vulnerability – More than 28,200 Citrix NetScaler instances are vulnerable to the critical RCE flaw CVE-2025-7775, which is actively exploited, prompting CISA to mandate fixes by August 28, 2025. https://securityaffairs.com/181614/hacking/over-28000-citrix-instances-remain-exposed-to-critical-rce-flaw-cve-2025-7775.html

🔑 Unpacking Passkeys Pwned: Possibly the most specious research in decades security research – SquareX's claim of a major vulnerability in passkeys, dubbed 'Passkeys Pwned,' misrepresents the FIDO spec and highlights risks from compromised devices rather than the security of passkeys themselves. https://arstechnica.com/security/2025/08/new-research-claiming-passkeys-can-be-stolen-is-pure-nonsense/

💻 Ransomware gang takedowns causing explosion of new, smaller groups cybercrime – The ransomware landscape is rapidly evolving, with over 40 new gangs emerging due to law enforcement actions against larger groups, leading to increased fragmentation and a rise in smaller, independent operations. https://therecord.media/ransomware-gang-takedown-proliferation

⚠️ CISA Adds Three Known Exploited Vulnerabilities to Catalog warning – CISA has included three new vulnerabilities in its KEV Catalog due to active exploitation, highlighting significant risks to federal networks and the need for prompt remediation. https://www.cisa.gov/news-events/alerts/2025/08/25/cisa-adds-three-known-exploited-vulnerabilities-catalog

⚠️ CISA Adds One Known Exploited Vulnerability to Catalog warning – CISA has added a new vulnerability, CVE-2025-7775, related to Citrix NetScaler, to its KEV Catalog, highlighting significant risks for federal networks and the need for prompt remediation. https://www.cisa.gov/news-events/alerts/2025/08/26/cisa-adds-one-known-exploited-vulnerability-catalog

⚠️ CISA Adds One Known Exploited Vulnerability to Catalog warning – CISA has added CVE-2025-57819, an authentication bypass vulnerability in Sangoma FreePBX, to its Known Exploited Vulnerabilities Catalog due to active exploitation. https://www.cisa.gov/news-events/alerts/2025/08/29/cisa-adds-one-known-exploited-vulnerability-catalog

⚙️ CISA Releases Three Industrial Control Systems Advisories vulnerability – CISA issued three advisories on security vulnerabilities in Industrial Control Systems, urging users to review for technical details and mitigation strategies. https://www.cisa.gov/news-events/alerts/2025/08/26/cisa-releases-three-industrial-control-systems-advisories

⚙️ CISA Releases Nine Industrial Control Systems Advisories vulnerability – CISA issued nine advisories on August 28, 2025, detailing vulnerabilities and exploits affecting various Industrial Control Systems, urging users to review for technical details and mitigation strategies. https://www.cisa.gov/news-events/alerts/2025/08/28/cisa-releases-nine-industrial-control-systems-advisories

🔍 Countering Chinese State-Sponsored Actors Compromise of Networks Worldwide to Feed Global Espionage System cybercrime – PRC state-sponsored cyber actors are targeting global networks, particularly in telecommunications and government sectors, employing sophisticated techniques to maintain long-term access and facilitate espionage, prompting a cybersecurity advisory from multiple agencies. https://www.cisa.gov/news-events/cybersecurity-advisories/aa25-239a

While I aim to pick news that everyone should know about, the selection still reflects what I find significant, cool, or fun. Most of the articles are in English, but some current warnings might be in German.

from beverageNotes

Cracked open an Elijah Craig Barrel Proof batch A125. It's a 10 year, 7 month aged product weighing in at 118.2 proof.

Smell some cinnamon, oak, and hints of cardamom and amburana.

First sip, sans water or ice (which I'm sure I'll need!), reminds me of amburana-aged whiskies. Some leather. Didn't pick up a lot of strong flavors. The swallow is fire. Moving straight to some water! LOL.

After adding some filtered water, I pick up some caramel crème brûlée. The heat dies down, but still lingers. The smell of amburana has intensified, which may or may not be psychosomatic; I really like the smell. Next sips pick up some cherry. The finish seems to hint at some citrus. Still quite hot, so time to try out some ice.

Smells much sweeter after adding some ice... honey, maybe? Pick up more cherry with a “brighter” sip. The finish is reminiscent of a cab or a Barolo. Not the tannin bit.

An interesting dram. Off to just enjoy it.

Cheers!

from 📰wrzlbrmpft's cyberlights💥

A weekly shortlist of cyber security highlights. The short summaries are AI-generated! If something is wrong, please let me know!

🚦 Dutch prosecution service attack keeps speed cameras offline cybercrime – A cyberattack on the Dutch Public Prosecution Service has left numerous speed cameras offline. While the attack didn't target the cameras directly, it hampers their reactivation due to system interconnectivity. https://www.theregister.com/2025/08/15/cyberattack_on_dutch_prosecution_service/

🎟️ Gefälschtes Gewinnspiel für Wiener Linien Jahreskarte im Umlauf (Fake prize draw for a Wiener Linien annual pass in circulation) warning – Fake Facebook posts are promoting a bogus contest for a Wiener Linien half-year ticket. The scam aims to steal credit card and personal information through a deceptive website. https://www.watchlist-internet.at/news/gefaelschtes-gewinnspiel-fuer-wiener-linien-jahreskarte-im-umlauf/

🔒 Multiple Vulnerabilities in Microsoft Products warning – Microsoft's August 2025 Patch Tuesday advisory addresses 111 security vulnerabilities, with 16 critical ones. Users are urged to update systems promptly, especially public-facing assets. https://cert.europa.eu/publications/security-advisories/2025-032/

🤖 Grok Exposes Underlying Prompts for Its AI Personas: ‘EVEN PUTTING THINGS IN YOUR ASS’ security research – Elon Musk's AI chatbot Grok has revealed prompts for its various personas, including a conspiracist character. This exposure raises concerns about the chatbot's design and potential influence on users. https://www.404media.co/grok-exposes-underlying-prompts-for-its-ai-personas-even-putting-things-in-your-ass/

🔓 HR giant Workday says hackers stole personal data in recent breach data breach – Workday confirmed a data breach involving the theft of personal information from a third-party database, raising concerns about potential social engineering scams. Details on affected individuals remain unclear. https://techcrunch.com/2025/08/18/hr-giant-workday-says-hackers-stole-personal-data-in-recent-breach/

🔐 Allianz Life data breach affects 1.1 million customers data breach – A data breach at Allianz Life has compromised the personal information of 1.1 million customers, including Social Security numbers. The breach is linked to the hacking group ShinyHunters. https://techcrunch.com/2025/08/18/allianz-life-data-breach-affects-1-1-million-customers/

🔑 UK drops demand for backdoor into Apple encryption privacy – The UK government has abandoned its demand for a backdoor into Apple’s encryption, potentially allowing Apple to restore Advanced Data Protection (ADP) iCloud encryption services in the UK. https://www.theverge.com/news/761240/uk-apple-us-encryption-back-door-demands-dropped

🚓 Speed cameras knocked out after cyber attack security news – A cyberattack on the Netherlands' Public Prosecution Service has rendered many speed cameras inoperable, impacting road safety and delaying legal proceedings as the organization remains offline. https://www.bitdefender.com/en-us/blog/hotforsecurity/speed-cameras-knocked-out-after-cyber-attack

🎤 Officials gain control of Rapper Bot DDoS botnet, charge lead developer and administrator cybercrime – Authorities have taken control of the powerful Rapper Bot DDoS botnet and charged its developer, Ethan Foltz, with aiding computer intrusions. The botnet conducted over 370,000 attacks worldwide since 2021. https://cyberscoop.com/rapper-bot-ddos-botnet-disrupted/

💊 Pharmaceutical firm Inotiv discloses ransomware attack. Qilin group claims responsibility for the hack data breach – Inotiv has reported a ransomware attack that encrypted systems and disrupted operations. The Qilin group claimed responsibility, alleging they stole 176GB of data from the firm. https://securityaffairs.com/181311/data-breach/pharmaceutical-firm-inotiv-discloses-ransomware-attack-qilin-group-claims-responsibility-for-the-hack.html

⚠️ Critical Chrome Flaw CVE-2025-9132 Exposes Browsers to Remote Code Execution vulnerability – A remote code execution flaw in Google Chrome, CVE-2025-9132, was discovered in the V8 JavaScript engine, allowing attackers to execute arbitrary code. Users are urged to update to version 139.0.7258.138 or later to mitigate risks. https://thecyberexpress.com/chrome-v8-vulnerability-cve%E2%80%912025%E2%80%919132/

🍔 McDonald's not lovin' it when hacker exposes rotten security security news – A white-hat hacker uncovered severe security flaws in McDonald's portals, enabling free food orders and access to sensitive data. The company has since made some fixes but still lacks a proper security disclosure process. https://www.theregister.com/2025/08/20/mcdonalds_terrible_security/

🤦‍♂️ Researcher Exposes Zero-Day Clickjacking Vulnerabilities in Major Password Managers vulnerability – A researcher revealed serious clickjacking vulnerabilities in popular password managers, enabling hackers to easily steal sensitive data if users visit malicious sites. Many remain unpatched. https://socket.dev/blog/password-manager-clickjacking

📞 Major Belgian telecom firm says cyberattack compromised data on 850,000 accounts data breach – Orange Belgium reported a cyberattack that compromised data from 850,000 customer accounts, including names and phone numbers. No critical data such as passwords or financial details was compromised. https://therecord.media/belgian-telecom-says-cyberattack-compromised-data-on-850000

👓 Harvard dropouts to launch 'always on' AI smart glasses that listen and record every conversation privacy – Former Harvard students are launching Halo X, AI-powered smart glasses that record conversations and provide real-time information. Privacy advocates raise concerns about covert recording and consent laws. https://techcrunch.com/2025/08/20/harvard-dropouts-to-launch-always-on-ai-smart-glasses-that-listen-and-record-every-conversation/

📸 'Screenshot-grabbing' Chrome VPN extension still available privacy – The FreeVPN.One Chrome extension has been found capturing users' screenshots and sending them to a remote server without consent. Despite warnings, it remains available on the Chrome Web Store. https://www.theregister.com/2025/08/21/freevpn_privacy_research/